An LLM does not need to understand MCP

August 6, 2025 - Roy Derks

Model Context Protocol (MCP) has become the standard for tool calling when building agents, but contrary to popular belief, your LLM does not need to understand MCP. You might have heard the term "context engineering": the idea that you, as the person interacting with an LLM, are responsible for providing the right context to help it answer your questions. To gather this context, you can use tool calling to give the LLM access to a set of tools it can use to fetch information or take actions.

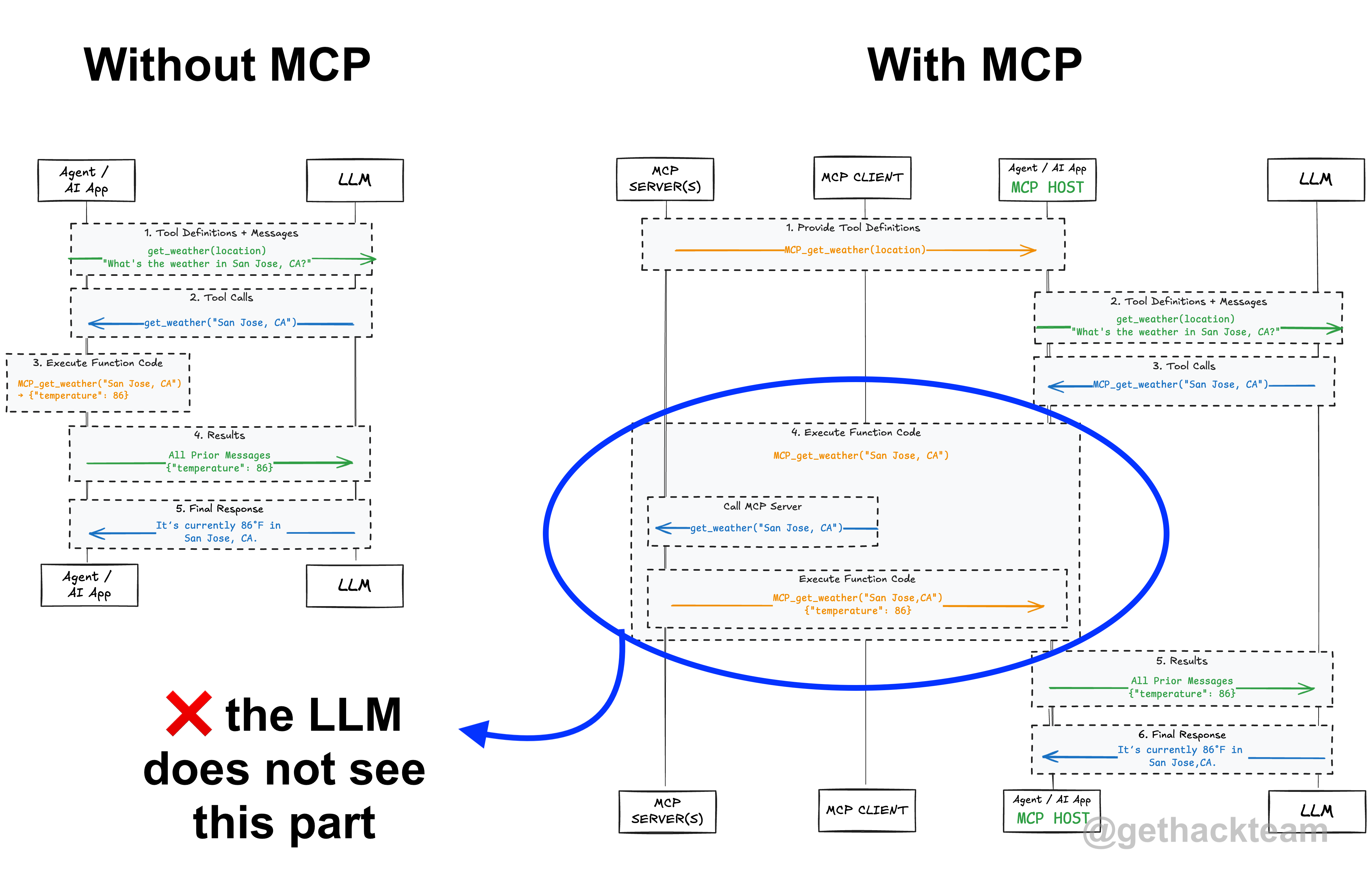

MCP helps by standardizing how your agent connects to these tools. But to your LLM, there’s no difference between “regular” tool calling and using a standard like MCP. It only sees a list of tool definitions; it doesn’t know or care what’s happening behind the scenes. And that’s a good thing.

By using MCP, you get access to thousands of tools without writing custom integration logic for each one. It greatly simplifies setting up an agentic loop that involves tool calling, often with almost zero development time. You, the developer, are responsible for calling the tools. The LLM only generates a snippet describing which tool(s) to call and with which input parameters.

In this blog post, I’ll break down how tool calling works, what MCP actually does, and how both relate to context engineering.

Tool Calling

LLMs understand the concept of tool calling, sometimes also called tool use or function calling. You provide a list of tool definitions as part of your prompt. Each tool includes a name, description, and expected input parameters. Based on the question and available tools, the LLM may generate a call.
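A tool definition is nothing more than structured data. The sketch below shows a hypothetical get_weather definition whose input parameters are described with JSON Schema, the format most tool-calling APIs accept. The exact field names (input_schema here) vary by provider, so treat them as an assumption. The small helper is also hypothetical: it shows how your application, not the model, can check that a generated call supplies the required parameters.

```python
import json

# Hypothetical tool definition: a name, a description, and a JSON Schema
# describing the expected input. Field names vary by LLM provider.
GET_WEATHER_TOOL = {
    "name": "get_weather",
    "description": "Get the current weather for a given location.",
    "input_schema": {
        "type": "object",
        "properties": {
            "location": {
                "type": "string",
                "description": "City and state, e.g. San Jose, CA",
            }
        },
        "required": ["location"],
    },
}


def missing_required_params(tool: dict, call_input: dict) -> list[str]:
    """Return the required parameters that a generated call did not supply."""
    required = tool["input_schema"].get("required", [])
    return [name for name in required if name not in call_input]


# The definition reaches the model as plain text, e.g. serialized JSON.
print(json.dumps(GET_WEATHER_TOOL, indent=2))
print(missing_required_params(GET_WEATHER_TOOL, {}))  # ['location']
```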

VIDEO: What is Tool Calling? Connecting LLMs to Your Data

But here’s the important part: LLMs don’t know how to use tools. They have no built-in way to execute anything. They just generate text that represents a function call.

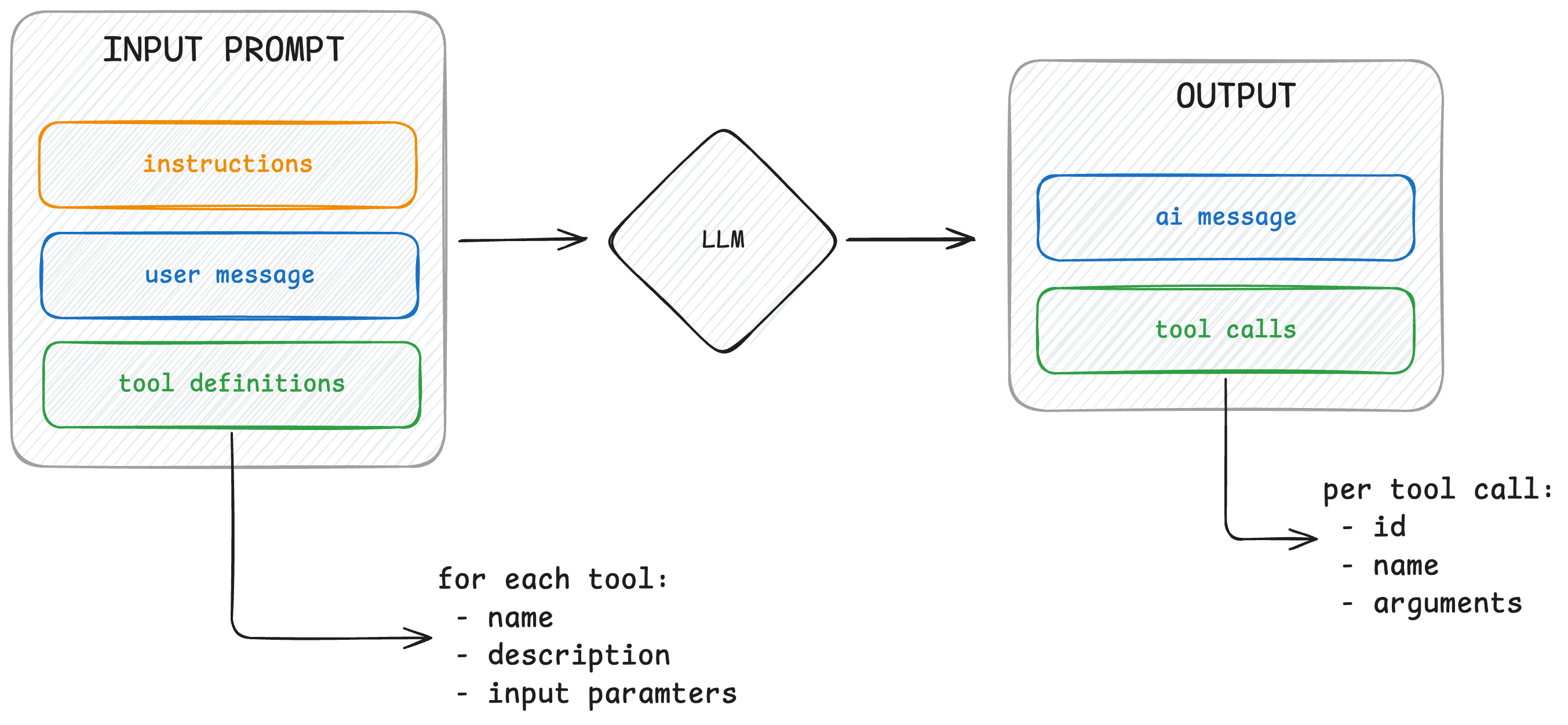

Input and output when interacting with an LLM

In the diagram above, you can see what the LLM actually sees: a prompt made up of instructions, previous user messages, and a list of available tools. Based on that, the LLM generates a text response which might include a tool call that your system should execute. It doesn’t understand tools in a meaningful way; it’s just making a prediction.
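To make this concrete, here is a minimal sketch of how an application might flatten instructions, previous messages, and tool definitions into the single block of text the model conditions on. The bracketed rendering format and the build_prompt helper are hypothetical. Real chat templates differ per model, but in every case the tools end up as text in the prompt.

```python
import json


def build_prompt(instructions: str, messages: list[dict], tools: list[dict]) -> str:
    """Flatten instructions, chat history, and tool definitions into one string.

    Hypothetical format: real chat templates differ per model.
    """
    parts = [f"[system]\n{instructions}", "[tools]"]
    # Each tool definition is serialized as JSON text; these characters
    # are all the model ever "knows" about the tool.
    parts += [json.dumps(tool) for tool in tools]
    for message in messages:
        parts.append(f"[{message['role']}]\n{message['content']}")
    return "\n".join(parts)


tools = [{
    "name": "get_weather",
    "description": "Get the current weather for a location.",
    "input_schema": {
        "type": "object",
        "properties": {"location": {"type": "string"}},
        "required": ["location"],
    },
}]
messages = [{"role": "user", "content": "What's the weather in San Jose, CA?"}]

prompt = build_prompt(
    "You are a helpful assistant. To call a tool, reply with JSON "
    '{"name": ..., "input": {...}}.',
    messages,
    tools,
)
print(prompt)
```

Whatever the template, the model's output is just more text, which is why something outside the model has to interpret it.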

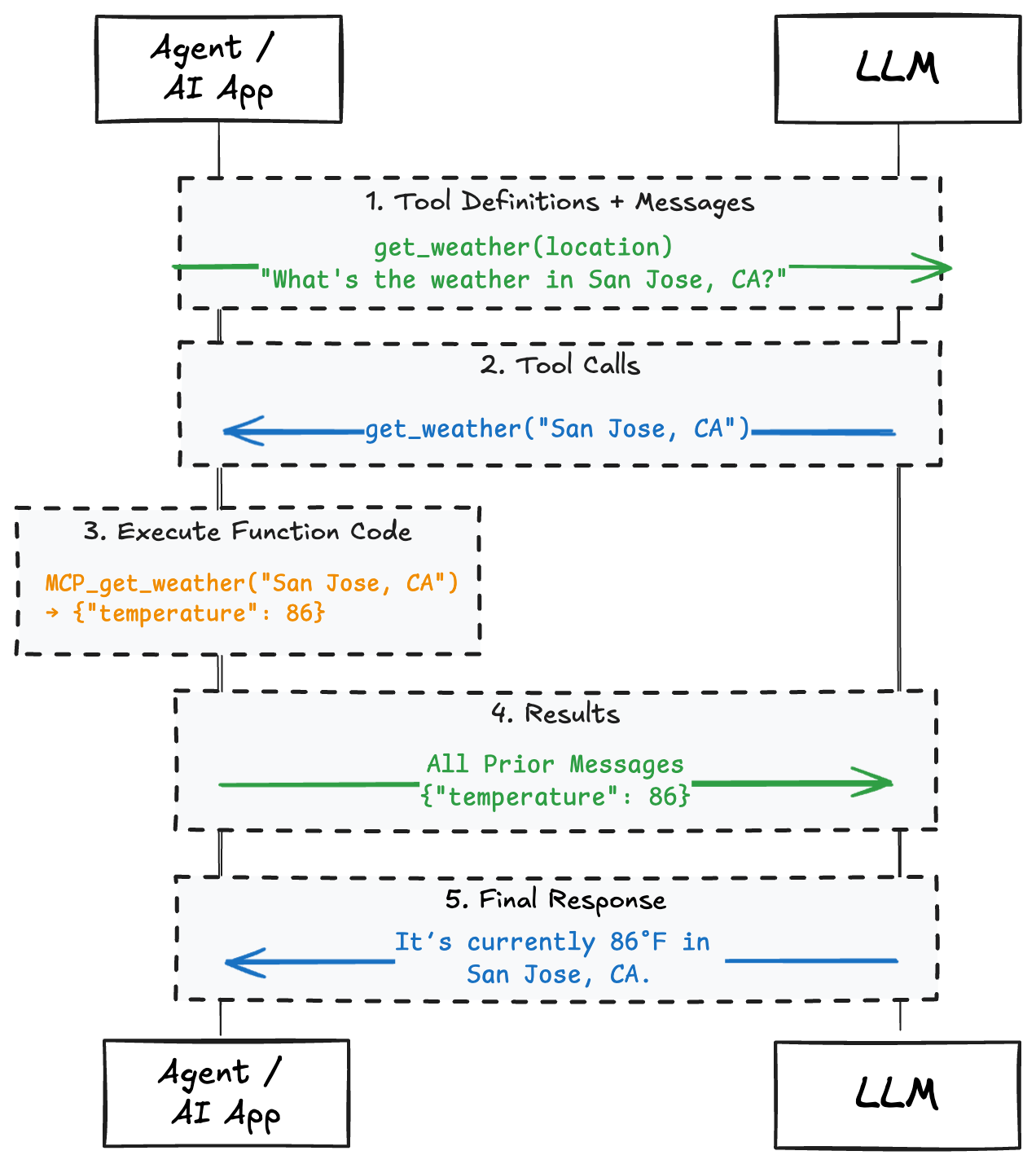

Let's look at a more practical example. Suppose you provide a tool called get_weather that takes a location as input, and then ask the model "What’s the weather in San Jose, CA?". It might respond with:

```json
{ "name": "get_weather", "input": { "location": "San Jose, CA" } }
```

The LLM is able to generate that snippet based on the context it was provided with, as you can see in the diagram below. The LLM doesn’t know how to call the get_weather tool, nor does it need to. Your agentic loop, or agentic application, is responsible for taking this output and making the actual API call or function invocation. It parses the generated tool name and inputs, runs the tool, and passes the result back to the LLM as a new message.
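The loop described above can be sketched in a few lines. Here fake_llm is a hypothetical stand-in for a real model call: it returns the JSON snippet from the example on its first turn and a plain-text answer once a tool result is in the conversation. get_weather is likewise a stub, not a real weather API. The point is the division of labor: the model only produces text, and run_agent parses it, executes the tool, and feeds the result back as a new message.

```python
import json
from typing import Optional


def get_weather(location: str) -> str:
    # Stub: a real implementation would call a weather API here.
    return f"Sunny and 24°C in {location}"


TOOLS = {"get_weather": get_weather}


def fake_llm(messages: list[dict]) -> str:
    # Hypothetical stand-in for a model call. It "predicts" a tool call
    # until a tool result appears in the conversation.
    if not any(m["role"] == "tool" for m in messages):
        return '{"name": "get_weather", "input": {"location": "San Jose, CA"}}'
    return "It is sunny and 24°C in San Jose, CA."


def parse_tool_call(text: str) -> Optional[dict]:
    """Return the tool call if the model's text is one, else None."""
    try:
        call = json.loads(text)
    except json.JSONDecodeError:
        return None  # plain-text answer, not a tool call
    if isinstance(call, dict) and "name" in call:
        return call
    return None


def run_agent(question: str, max_steps: int = 5) -> str:
    messages = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        output = fake_llm(messages)
        call = parse_tool_call(output)
        if call is None:
            return output  # the model answered directly
        # The application, not the model, executes the tool.
        result = TOOLS[call["name"]](**call.get("input", {}))
        messages.append({"role": "assistant", "content": output})
        messages.append({"role": "tool", "content": result})
    raise RuntimeError("agent did not finish within max_steps")


print(run_agent("What's the weather in San Jose, CA?"))
```

The max_steps limit is a common safeguard so a model that keeps requesting tools cannot loop forever.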

Tool Calling flow: interaction with an LLM

This separation of concerns is important. The LLM just generates predictions, and your system handles the execution. And that brings us to where MCP fits in.

Model Context Protocol (MCP)

Model Context Protocol, or MCP, is a way to standardize how your agent connects to external capabilities such as tools, prompts, resources, and sampling. Right now, MCP is best known for simplifying the tools side of that equation. Instead of manually writing code for each tool in a custom format, MCP defines a consistent schema and communication pattern. Think of it as a universal adapter (like USB-C) for tooling.
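Under the hood, MCP messages are JSON-RPC 2.0. The sketch below builds the two requests a client typically sends for tools: tools/list to discover what a server offers, and tools/call to run one. The method names and the name/arguments/inputSchema fields follow the MCP specification's message shapes. The helper functions are hypothetical, and EXAMPLE_LIST_RESULT is illustrative data, not output from a real server.

```python
import itertools
import json
from typing import Optional

_ids = itertools.count(1)  # every JSON-RPC request needs a unique id


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request envelope."""
    request = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


def list_tools_request() -> dict:
    # Ask a server which tools it exposes.
    return jsonrpc_request("tools/list")


def call_tool_request(name: str, arguments: dict) -> dict:
    # Ask a server to run one tool with the given arguments.
    return jsonrpc_request("tools/call", {"name": name, "arguments": arguments})


# Illustrative tools/list result: each tool's inputSchema is a JSON Schema.
EXAMPLE_LIST_RESULT = {
    "tools": [{
        "name": "get_weather",
        "description": "Get the current weather for a location.",
        "inputSchema": {
            "type": "object",
            "properties": {"location": {"type": "string"}},
            "required": ["location"],
        },
    }]
}

print(json.dumps(list_tools_request()))
print(json.dumps(call_tool_request("get_weather", {"location": "San Jose, CA"})))
```

Because every server speaks this same format, one client implementation can talk to any of them.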

VIDEO: What is MCP? Integrate AI Agents with Databases & APIs

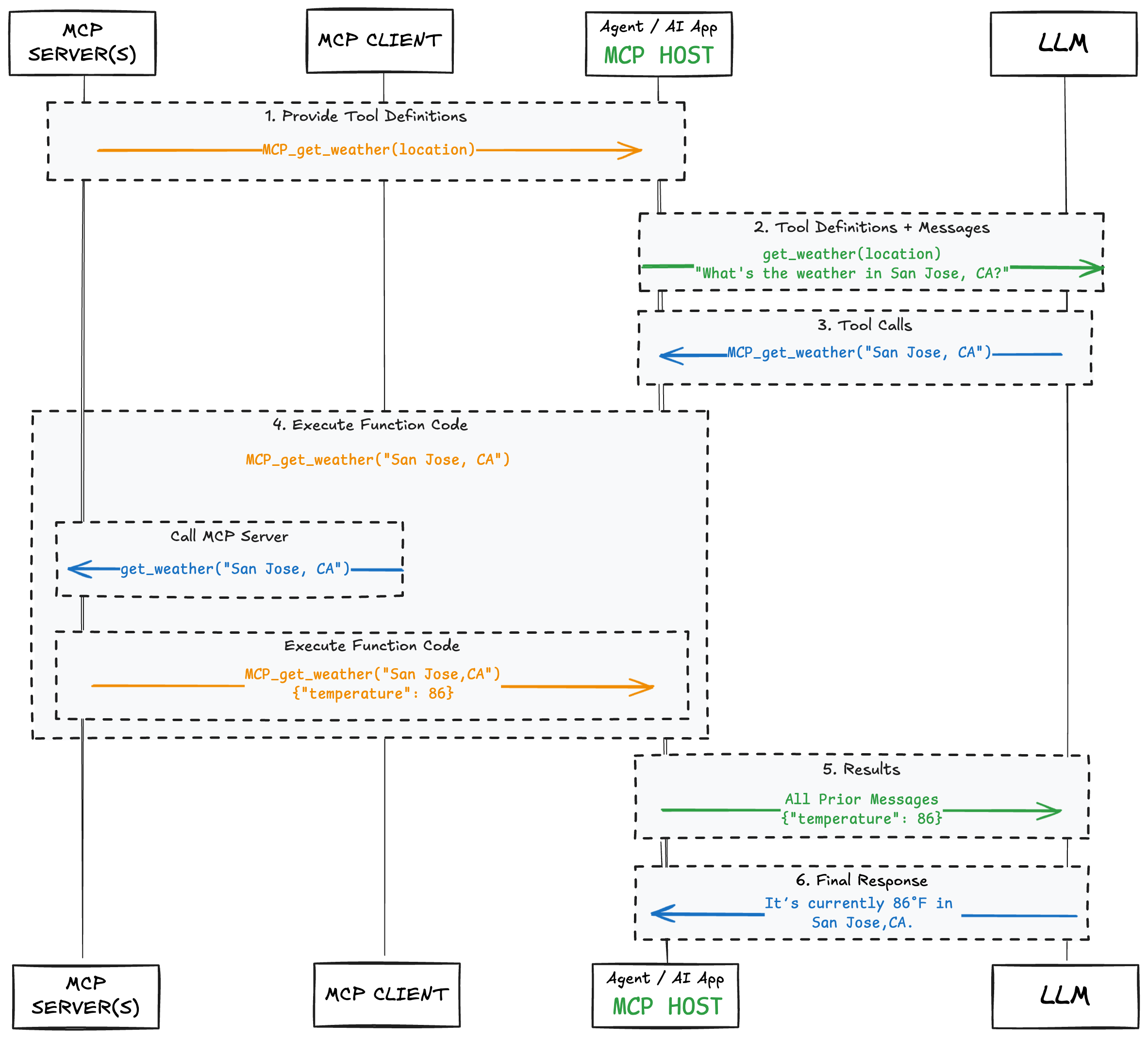

MCP usually involves three components: a host application, an MCP client, and one or more MCP servers. The host might be a chat app or an IDE (like Cursor) that includes an MCP client capable of connecting to different servers. These servers expose tools, prompts, and resources, and can also request sampling (LLM completions) through the client.
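To see how these pieces relate, here is a toy version of that architecture. The InMemoryServer and ToyMcpClient classes are hypothetical. They skip the real transports (such as stdio or HTTP) and the initialization handshake, and they only mimic the tools/list and tools/call methods. The point is the routing: the client inside the host collects tools from every server and forwards each call to whichever server owns that tool.

```python
from typing import Callable


class InMemoryServer:
    """Hypothetical stand-in for an MCP server that exposes a set of tools."""

    def __init__(self, tools: dict[str, tuple[str, Callable[..., str]]]):
        # tool name -> (description, implementation)
        self._tools = tools

    def handle(self, method: str, params: dict) -> dict:
        if method == "tools/list":
            return {"tools": [
                # Schema simplified for the sketch.
                {"name": name, "description": desc,
                 "inputSchema": {"type": "object"}}
                for name, (desc, _) in self._tools.items()
            ]}
        if method == "tools/call":
            _, fn = self._tools[params["name"]]
            text = fn(**params.get("arguments", {}))
            return {"content": [{"type": "text", "text": text}], "isError": False}
        raise ValueError(f"unsupported method: {method}")


class ToyMcpClient:
    """Hypothetical client inside a host app: aggregates tools, routes calls."""

    def __init__(self, servers: list[InMemoryServer]):
        self._route: dict[str, InMemoryServer] = {}
        self.tools: list[dict] = []
        for server in servers:
            for tool in server.handle("tools/list", {})["tools"]:
                self._route[tool["name"]] = server
                self.tools.append(tool)

    def call_tool(self, name: str, arguments: dict) -> dict:
        # Forward the call to the server that registered this tool.
        return self._route[name].handle(
            "tools/call", {"name": name, "arguments": arguments})


weather = InMemoryServer({"get_weather": ("Current weather for a location.",
                                          lambda location: f"Sunny in {location}")})
calendar = InMemoryServer({"list_events": ("Upcoming calendar events.",
                                           lambda: "No events today")})
client = ToyMcpClient([weather, calendar])

print([t["name"] for t in client.tools])  # ['get_weather', 'list_events']
print(client.call_tool("get_weather", {"location": "San Jose, CA"}))
```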

The way you interact with the LLM doesn’t change. What changes is how the tools are surfaced to it. The agentic application talks to the MCP client, which talks to the right server. Tools are described in a format the LLM can use.

Tool Calling flow: interaction with an LLM and MCP

For the same question, "What’s the weather in San Jose, CA?", the LLM still gets the same list of tools. Based on that list, it tells you which tool to call; how that tool is called is up to the developer. When using MCP, the tool is called through MCP.
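The adapter between the two worlds is small. This sketch, with hypothetical function names, converts an MCP tool definition into a generic name/description/input_schema shape (an assumed provider format) and turns the model's generated snippet into the params of an MCP tools/call request. The MCP-derived definition comes out identical to one written by hand, which is exactly why the model cannot tell the difference.

```python
import json

# An MCP server describes its tool with "inputSchema" (JSON Schema).
mcp_tool = {
    "name": "get_weather",
    "description": "Get the current weather for a location.",
    "inputSchema": {
        "type": "object",
        "properties": {"location": {"type": "string"}},
        "required": ["location"],
    },
}

# The same tool, written by hand for an LLM API without MCP
# (field names are an assumption; they vary by provider).
hand_written_tool = {
    "name": "get_weather",
    "description": "Get the current weather for a location.",
    "input_schema": {
        "type": "object",
        "properties": {"location": {"type": "string"}},
        "required": ["location"],
    },
}


def mcp_tool_to_llm_tool(tool: dict) -> dict:
    """Rename fields so the model sees the provider's usual tool format."""
    return {
        "name": tool["name"],
        "description": tool.get("description", ""),
        "input_schema": tool["inputSchema"],
    }


def llm_call_to_mcp_params(model_output: str) -> dict:
    """Turn the model's generated snippet into tools/call params."""
    call = json.loads(model_output)
    return {"name": call["name"], "arguments": call.get("input", {})}


# The model receives exactly the same definition either way.
print(mcp_tool_to_llm_tool(mcp_tool) == hand_written_tool)  # True
print(llm_call_to_mcp_params(
    '{"name": "get_weather", "input": {"location": "San Jose, CA"}}'))
```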

The benefit here isn’t for the LLM, it’s for you as the developer. MCP helps manage the complexity of working with many different tools as your agent grows. It makes it easier to reuse tools across projects, enforce consistent formats, and plug into new systems without rewriting everything.

But the LLM will never know you are using MCP unless you tell it so in the system prompt or the tool definitions. You, the developer, are responsible for calling the tools. The LLM only generates a snippet describing which tool(s) to call and with which input parameters.

Next, let’s look at how this fits into the bigger picture of context engineering, and why abstraction layers like MCP make things easier for humans, not models.

Context Engineering

Context engineering is about giving your LLM the right inputs so it can generate useful outputs. That sounds simple, but it’s actually one of the most important parts of building effective AI systems.

When you ask a model a question, you’re really giving it a prompt: a block of text it uses to predict the text that comes next. The quality of that prompt directly affects the quality of the response.

This is where tools come in. Sometimes the model doesn’t have enough context to answer a question well. Maybe it needs real-time data, access to user profiles, or the ability to take action on behalf of the user. Tool calling lets you solve that by giving the model access to external systems, as described earlier in this post.

But again, the model doesn’t need to know how those tools work. It just needs to know that they exist, what they’re for, and how to call them. That’s where context engineering meets tool design: you’re crafting a set of tool definitions that become part of the model’s prompt.

Tool Calling as seen by an LLM

MCP makes that process cleaner and more repeatable. Instead of hardcoding tools or writing ad hoc wrappers, you define a structured interface once and expose it through MCP. The LLM still sees the same types of tool definitions, but now they’re easier to maintain and scale.
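As an illustration of "define once", the sketch below derives a tool definition from an ordinary Python function's signature and docstring using the standard inspect module, so the function itself stays the single source of truth. The tool_definition helper is hypothetical and deliberately maps only str, int, float, and bool to JSON Schema types. The official MCP SDKs provide their own, more complete helpers for this.

```python
import inspect
from typing import Callable

# Deliberately small mapping from Python annotations to JSON Schema types.
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def tool_definition(fn: Callable) -> dict:
    """Build an MCP-style tool definition (name, description, inputSchema) from fn."""
    properties: dict[str, dict] = {}
    required: list[str] = []
    for name, param in inspect.signature(fn).parameters.items():
        # Unknown or missing annotations fall back to "string".
        properties[name] = {"type": _JSON_TYPES.get(param.annotation, "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # no default value: the model must supply it
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "inputSchema": {"type": "object", "properties": properties,
                        "required": required},
    }


def get_weather(location: str, days: int = 1) -> str:
    """Get the weather forecast for a location."""
    return f"Forecast for {location} ({days} day(s))"  # stub implementation


print(tool_definition(get_weather))
```

Changing the function's signature automatically updates the definition the model sees, which is the maintenance win described above.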

So in the end, MCP is a tool for us developers, not for the LLM. It helps us build more reliable, modular systems. And it helps us focus on context engineering without reinventing the plumbing every time.

Where to go from here?

If you found this tutorial helpful, don’t forget to share it with your network. For more content on AI and web development, subscribe to my YouTube channel and connect with me on LinkedIn, X or Bluesky.